With Microsoft Azure’s Data Factory, you can extract data from Amazon S3 and Google Cloud Storage into your data pipeline (ETL workflow). However, Data Factory does not let you load (put/upload) files back into these platforms at the end of your extract, transform, load cycle.

Couchdrop and cloud storage

To work around this and load files back into these platforms, you can use Data Factory’s SFTP connector together with Couchdrop. Couchdrop is a cloud SFTP/FTP conduit that acts as a fabric on top of cloud storage. It also offers webhooks, an API, web portal uploads, and more.

Couchdrop supports Google Cloud Storage, Amazon S3, SharePoint, Dropbox, and many other storage platforms. Using Couchdrop with Azure Data Factory, you can pull data from any supported cloud storage platform, transform it, and then load it to the same or a different cloud platform, all through SFTP.

ETL Use Case Examples

- As a vendor, your clients send you files via SFTP (or another means, such as the web portal), and a webhook event on upload initiates your ETL process.

- Your clients send you data via SFTP, and it is then processed through automated ETL operations.

- As a client, you expose your data to your vendor, who processes each uploaded file when the webhook event fires.

Using Couchdrop with Azure Data Factory

Configuring Couchdrop is straightforward and only takes a few steps. You can create users who are locked to specific buckets and limited to specific file operations (upload only, download only, read/write, etc.). You can also configure webhooks for upload/download events on certain folders. This lets you trigger different workflows depending on the folder a file was uploaded to and the user who uploaded it. Couchdrop also offers an API to help with onboarding users programmatically.
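As a rough illustration of triggering different workflows per folder, the sketch below maps an uploaded file's path to a workflow name, with the most specific folder winning. The routing table, the `invoices` subfolder, and the workflow names are hypothetical. Only the `/Google Cloud` folder comes from this walkthrough, and how the path reaches your code depends on the webhook payload you receive.

```python
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical routing table: folder prefix -> workflow name.
ROUTES = {
    "/Google Cloud/invoices": "invoice-etl",
    "/Google Cloud": "default-gcs-etl",
}


def pick_workflow(uploaded_path: str, routes: dict = ROUTES) -> Optional[str]:
    """Return the workflow for the deepest folder prefix containing the file."""
    parts = PurePosixPath(uploaded_path).parts
    best_depth, best_workflow = -1, None
    for prefix, workflow in routes.items():
        prefix_parts = PurePosixPath(prefix).parts
        # Compare whole path components so "/Google Cloudy" never matches "/Google Cloud".
        is_inside = parts[: len(prefix_parts)] == prefix_parts
        if is_inside and len(prefix_parts) > best_depth:
            best_depth, best_workflow = len(prefix_parts), workflow
    return best_workflow
```

The same idea extends to routing by user: key the table on a (folder, username) pair instead of the folder alone.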

To get up and running with Couchdrop’s cloud SFTP server and integrate it into your ETL processes, follow these steps:

- Step 1. Configure storage in Couchdrop

- Step 2. Configure user(s)

- Step 3. Configure webhooks (optional)

- Step 4. Configure Couchdrop’s SFTP in Data Factory

Step 1. Configure storage in Couchdrop

Navigate to your storage portal and configure a new storage connector. Below we are configuring Google Cloud Storage.

Step 2. Configure user(s)

Next, configure a user. When creating the user, be sure to restrict them to the folder configured above (/Google Cloud in our case). This user could be an external party uploading data. You could then create a second user with read/write access who pulls the data down when the first user’s upload fires a webhook event, and extracts it into your workflow.

Step 3. Configure webhooks (optional)

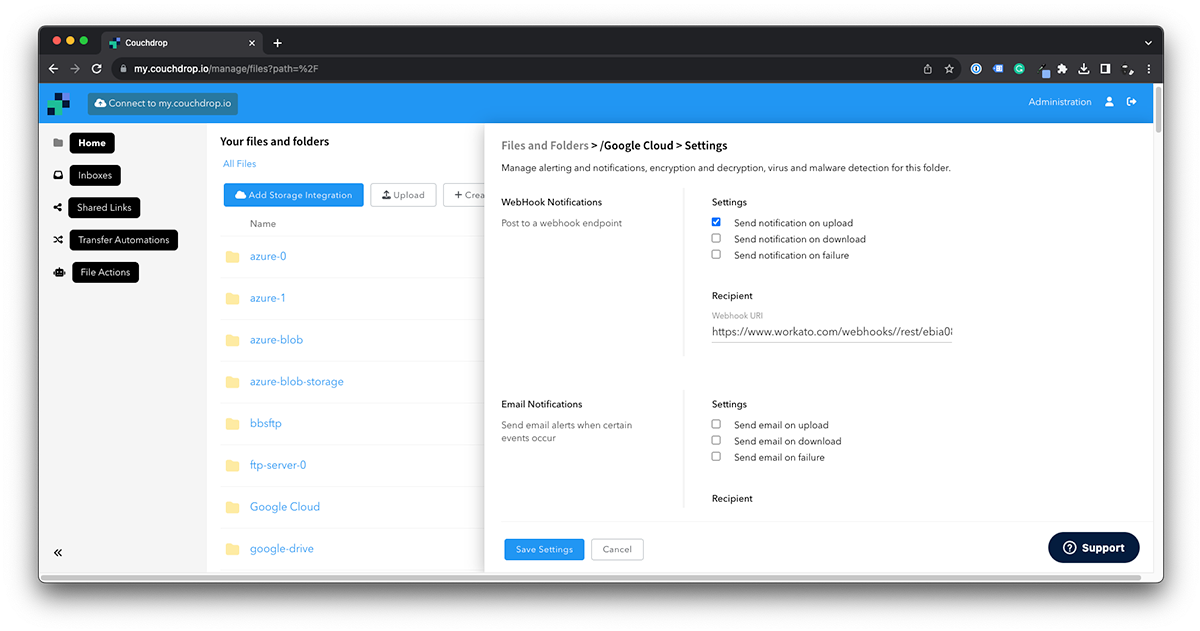

In Couchdrop’s SFTP virtual file system, open the folder you want to send webhooks for, select the event that should trigger the webhook, enter the destination URL, and save.
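The URL you enter needs something listening on the other end. Below is a minimal receiver sketch using only Python's standard library. It assumes the webhook arrives as an HTTP POST with a JSON object body, which you should confirm against a real event, and it makes no assumption about field names. `start_etl` is a hypothetical placeholder for whatever starts your pipeline.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def parse_event(raw_body: bytes) -> dict:
    """Decode a webhook body; return {} if it is empty or not a JSON object."""
    if not raw_body:
        return {}
    try:
        event = json.loads(raw_body.decode("utf-8"))
    except ValueError:  # covers both bad UTF-8 and malformed JSON
        return {}
    return event if isinstance(event, dict) else {}


def start_etl(event: dict) -> None:
    """Hypothetical placeholder: replace with the call that starts your ETL run."""
    print("Would start ETL for event:", event)


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        event = parse_event(self.rfile.read(length))
        start_etl(event)
        # Acknowledge receipt with an empty response.
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for webhook POSTs until interrupted."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Calling `serve()` starts the listener. In production you would put this behind HTTPS and verify that requests really come from your Couchdrop account before acting on them.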

Sample Couchdrop SFTP webhook output:

Step 4. Configure Couchdrop’s SFTP in Data Factory

Because Couchdrop works as a standard SFTP server, you only need the hostname (sftp.couchdrop.io) and your Couchdrop SFTP user’s credentials.
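If you define linked services as JSON rather than through the Data Factory UI, the sketch below builds a definition for the SFTP connector with basic authentication. The property names follow the Data Factory SFTP linked service schema as we understand it, so check them against the current connector documentation. The service name, username, password, and host key fingerprint are placeholders you must supply, and port 22 is an assumption based on the SFTP default.

```python
import json


def sftp_linked_service(name: str, username: str, password: str,
                        host_key_fingerprint: str,
                        host: str = "sftp.couchdrop.io", port: int = 22) -> dict:
    """Build an SFTP linked service definition using basic authentication."""
    return {
        "name": name,
        "properties": {
            "type": "Sftp",
            "typeProperties": {
                "host": host,
                "port": port,  # assumed default SFTP port
                "authenticationType": "Basic",
                "userName": username,
                # Prefer an Azure Key Vault reference over an inline secret in real use.
                "password": {"type": "SecureString", "value": password},
                # Keep host key validation on and pin the server's fingerprint.
                "skipHostKeyValidation": False,
                "hostKeyFingerprint": host_key_fingerprint,
            },
        },
    }


def to_json(definition: dict) -> str:
    """Serialize the definition for pasting into Data Factory's JSON editor."""
    return json.dumps(definition, indent=2)
```

For example, `to_json(sftp_linked_service("CouchdropSftp", "<username>", "<password>", "<fingerprint>"))` produces the JSON text, where `CouchdropSftp` is just an illustrative name.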

On a final note, Couchdrop is simply a conduit and does not store data, nor does it ‘sync’ data to storage platforms. It processes transfers in memory, streaming them directly to your endpoint, and that memory is then overwritten.

Want to try Couchdrop for yourself? You can get a 14-day free trial with no credit card and no required sales calls or other hoops to jump through. Simply sign up and go. Start your trial today.